What Are Data Pipelines, Data Quality, and Data Governance? |

TL;DR A data pipeline moves data from source to destination. Data quality ensures the data is accurate, complete, and consistent during that journey. Data governance defines the rules, security, and compliance for handling that data. Together, they ensure your organisation receives trusted, timely, and compliant information for decision-making.

Why This Matters to Leaders in Healthcare, Government, FMCG, and Infrastructure

In the industries Notitia works with most, the stakes for getting data wrong are high:

- Healthcare – Incorrect patient or service data can delay care or skew funding allocations.

- Government – Non-compliant data handling can breach privacy laws and erode public trust.

- FMCG – Inaccurate supply chain data can cause costly overproduction or stockouts.

- Infrastructure – Poor project data can lead to mismanaged resources and budget blowouts.

A well-built data pipeline ensures data flows seamlessly. Adding data quality measures ensures the data itself is correct. Enforcing governance ensures it’s managed securely and in compliance with laws. Neglect any one of these, and you risk operational, financial, and reputational damage.

What is a Data Pipeline?

A data pipeline is a set of automated processes that moves data from one or more sources to a destination, often transforming it along the way.

Core stages of a data pipeline:

- Ingestion – Data is collected from databases, APIs, files, IoT devices, or applications.

- Processing & transformation – Data is cleaned, standardised, enriched, and structured.

- Storage – Data is placed into a warehouse, lake, or analytics platform.

- Consumption – Data is delivered to end-users via dashboards, applications, or reports.

Without quality and governance, pipelines can still deliver — but they may deliver bad data faster. That’s why these three areas must be addressed together.

Types of Data Pipelines

Different pipelines are designed for different business needs:

- Batch pipelines – Move data in scheduled intervals (e.g., monthly finance reports).

- Streaming pipelines – Move data continuously for real-time insights (e.g., live inventory tracking).

- Data integration pipelines – Merge data from multiple sources into a unified view.

- Cloud-native pipelines – Purpose-built for modern, scalable cloud platforms.

What is Data Quality in a Data Pipeline?

Data quality is about ensuring the information you use is accurate, complete, consistent, and reliable.

Inside a pipeline, quality is managed through:

- Validation rules – Checking values meet required standards before processing.

- Standardisation – Converting formats (e.g., dates, currencies) to be consistent.

- Deduplication – Removing duplicate entries that skew reports.

- Enrichment – Adding missing information from verified sources.

- Error handling – Flagging or correcting anomalies automatically.

Why it matters:

Poor quality data impacts decision-making. In healthcare, for example, a single incorrect code in patient data can affect reporting to funding bodies. In FMCG, an incorrect inventory figure can throw off supply chain forecasts.

What is Data Governance in a Data Pipeline?

Data governance is the framework of policies, standards, and controls that guide how data is managed across its lifecycle.

Within a data pipeline, governance includes:

- Role-based access controls – Only authorised users can view or modify sensitive data.

- Audit trails – Every change and movement of data is recorded.

- Data lineage – Documenting where the data came from and how it has been transformed.

- Compliance enforcement – Embedding rules to meet regulations like the Privacy Act or industry-specific standards.

Why it matters:

Strong governance protects sensitive data, prevents breaches, and ensures compliance. In government and health projects, it can be the difference between a successful audit and a costly fine.

How Data Pipelines, Data Quality, and Data Governance Work Together

Think of it like a water supply:

- The pipeline is the plumbing.

- Data quality is the filtration system that ensures the water is safe to drink.

- Data governance is the regulation that ensures water is distributed fairly and safely.

Without quality, the pipeline might deliver contaminated data. Without governance, even clean data might be misused or accessed inappropriately.

When combined, they:

- Deliver trusted, timely insights for decision-making

- Reduce compliance risks

- Improve operational efficiency by removing rework

- Build confidence in reporting across the organisation

Best Practices for Building Reliable Data Pipelines

- Define clear ownership and accountability.

- Automate quality checks and error handling.

- Build with scalability in mind — batch today, streaming tomorrow.

- Monitor pipelines with real-time observability tools.

- Align pipeline design with governance and compliance requirements.

Best practice for leaders

To integrate quality and governance into your data pipelines:

- Design for quality from the start – Embed validation and standardisation in the pipeline’s architecture.

- Automate wherever possible – Reduce manual checks by building rules into the pipeline.

- Document lineage and transformations – Transparency builds trust and speeds up audits.

- Enforce role-based access controls – Protect sensitive information without blocking access to what users need.

- Continuously monitor and improve – Pipelines, rules, and data needs evolve — governance and quality measures should too.

Emerging Trends in Data Pipelines

- AI and Machine Learning – real-time pipelines feed predictive models and generative AI.

- Data Mesh Architectures – decentralising pipelines across domains empowers teams and avoids bottlenecks.

- Data Observability – monitoring lineage, anomalies, and health ensures trusted delivery.

- Privacy and Security – with regulations like Australia’s SOCI Act, privacy-by-design is now essential.

Frequently Asked Questions

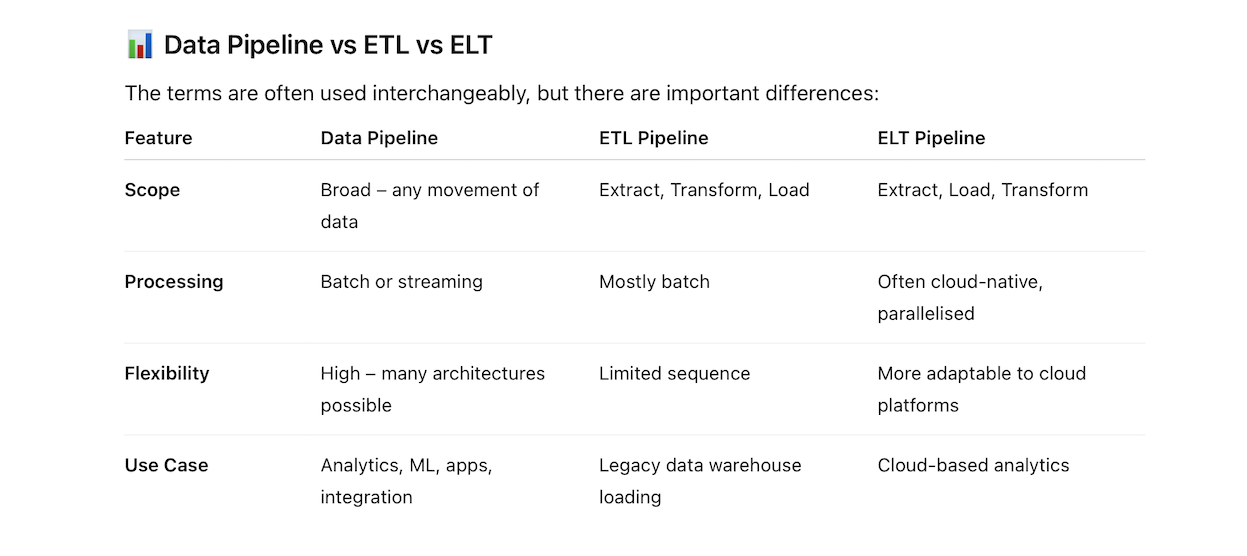

What’s the difference between data pipelines and ETL pipelines?

ETL pipelines are one type of data pipeline where transformation happens before data is stored. Data pipelines can be ETL, ELT, batch, streaming, or hybrid.

What’s the difference between batch and streaming pipelines?

Batch pipelines move data in scheduled intervals, while streaming pipelines process data continuously for real-time use cases.

How does data quality affect analytics?

Poor data quality leads to incorrect insights, which can result in bad business decisions.

Is data governance only for compliance?

No. While compliance is a driver, governance also improves efficiency, reduces errors, and increases stakeholder trust.

What tools help manage quality and governance in pipelines?

Platforms like Qlik Cloud Analytics, Snowflake, and Apache Airflow can integrate quality checks and governance policies directly into pipelines.

Data pipelines move information, but data quality ensures it’s correct, and data governance ensures it’s used appropriately. Together, they form the backbone of a data strategy that is not only efficient but also trustworthy and compliant.

If you want a pipeline that’s built for speed and trust, speak to Notitia about designing a solution that integrates quality and governance from day one.